Emerging Approaches for Governing AI

We are in a period of rapid innovation in the development and application of artificial intelligence (AI).

Advances in generative AI—which can produce images and text from brief verbal prompts—captured the public’s attention in late 2022. Rapidly advancing AI tools known as large language models (LLMs) enable deep analysis and the generation of new images, text, software code, and even ideas.

These technologies are entering the marketplace and are already being used by researchers to advance scientific discovery. Emerging AI technologies have the potential to accelerate research and development in medicine, agriculture, transportation, education, energy, and more.

But AI also raises understandable concerns that need to be addressed on several fronts. How can we ensure that these technologies are designed and used responsibly—and that the benefits of AI reach everyone? What principles and guardrails should guide the development and use of AI?

Initial Steps for AI Policymaking

Government has long played a role in protecting consumers through laws and regulations. AI developers, the larger business community, organizations, institutions, government, and consumers would all benefit from public policies that foster innovation through responsible AI while providing meaningful protections.

The federal government has taken initial steps to develop AI policies. In January 2023, the National Institute of Standards and Technology (NIST) released the voluntary AI Risk Management Framework, and in March 2023 it launched the Trustworthy and Responsible AI Resource Center.

In November 2023, the Biden Administration announced the creation of the U.S. AI Safety Institute (USAISI), which, according to the Department of Commerce, will “facilitate the development of standards for safety, security, and testing of AI models, develop standards for authenticating AI-generated content, and provide testing environments for researchers to evaluate emerging AI risks and address known impacts.”

In June 2023, Senate Majority Leader Chuck Schumer presented the SAFE Innovation Framework to guide Congress in developing regulations for AI. The framework does not endorse any specific legislation, but it calls for prioritizing key goals, such as supporting security and innovation. Throughout the fall of 2023, the Senate also convened a series of AI Insight Forums to help educate lawmakers about AI.

In July 2023, seven leading AI companies, including Microsoft, made voluntary commitments, developed by the Biden-Harris Administration, to advance safe, secure, and trustworthy AI. Several other companies later agreed to the commitments. In addition, in February 2024, 20 leading AI and platform companies announced their participation in a new Tech Accord to Combat Deceptive Use of AI in 2024 Elections.

The federal government has also taken initial steps to expand access to AI resources through the creation of a National Artificial Intelligence Research Resource (NAIRR). The most robust uses of AI require access to tremendous computational power and data resources, which many researchers and organizations lack because of budgetary and technological constraints. The aim of the NAIRR is to provide AI computing power and data access to qualifying organizations that would otherwise be unable to fully benefit from AI. The National Science Foundation (NSF) launched an interagency NAIRR pilot program in January 2024, but legislation and funding are needed to realize the full vision of the NAIRR.

As a first foray into AI policymaking in his second term, President Trump rescinded a 2023 Biden Administration executive order on AI and issued his own AI executive order, which calls for the development of an AI Action Plan within 180 days. That Plan is expected in spring 2025.

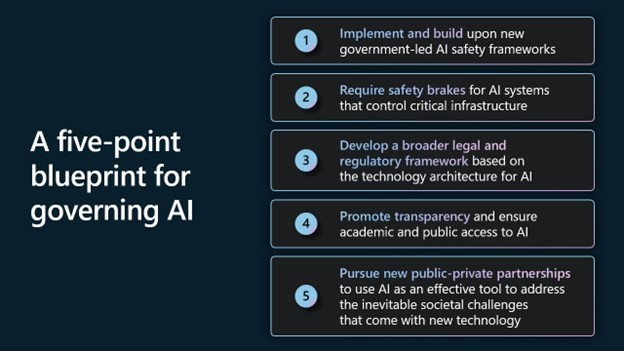

Microsoft’s Five-Point Blueprint for Governing AI

In May 2023, Microsoft released a white paper that includes a five-point blueprint for addressing several current and emerging AI issues through public policy, law, and regulation. The blueprint was presented with the recognition that it would benefit from a broader, multi-stakeholder discussion and require deeper development.

We encourage you to access the white paper for a detailed discussion about each of the five points in the blueprint. The white paper also includes a section titled “Responsible by Design: Microsoft’s Approach to Building AI Systems that Benefit Society.”

Microsoft also committed to supporting innovation and competition through a set of AI Access Principles. Under these principles, the company will make AI tools and development models widely available as well as provide flexibility for developers to use Microsoft Azure for AI innovations. The principles also include commitments to support AI skilling and sustainability.

Microsoft Principles and Recommendations

Microsoft has acknowledged that while new laws and regulations are needed, tech companies must also act responsibly on their own. To this end, the company has adopted six AI principles to guide its approach to this powerful technology:

- Fairness

- Reliability and Safety

- Privacy and Security

- Inclusiveness

- Transparency

- Accountability

Microsoft has also endorsed the development of policy guardrails built around the following goals:

- Ensuring that AI is built and used responsibly and ethically.

- Ensuring that AI advances international competitiveness and national security.

- Ensuring that AI serves society broadly, not narrowly.

Voices for Innovation (VFI) will support public policies that help achieve these goals. We encourage our members to explore this issue in greater detail using the resources below. Technology professionals, including VFI members, can bring their valuable expertise to discussions about AI with family, friends, colleagues, and policymakers.

We will continue to listen closely to this discussion and will keep our membership informed about proposed AI policies as they are developed in Washington and state capitals.

Resources

- Removing Barriers to American Leadership in Artificial Intelligence (The White House)

- Responsible AI Transparency Report (Microsoft, May 2024)

- A Tech Accord to Combat Deceptive Use of AI in 2024 Elections

- Microsoft’s AI Access Principles: Our commitments to promote innovation and competition in the new AI economy (Brad Smith, Microsoft on the Issues)

- Meeting the moment: combating AI deepfakes in elections through today’s new tech accord (Brad Smith, Microsoft on the Issues)

- Our commitments to advance safe, secure, and trustworthy AI (Brad Smith, Microsoft on the Issues)

- Expanding Access to Artificial Intelligence through the NAIRR (Voices for Innovation Blog)

- Voluntary Commitments by Microsoft to Advance Responsible AI Innovation

- SAFE Innovation Framework (Senate Majority Leader Chuck Schumer)

- How do we best govern AI? (Brad Smith, Microsoft on the Issues)

- Governing AI: A Blueprint for the Future (Microsoft White Paper)

- Reflecting on our responsible AI program: Three critical elements for progress (Natasha Crampton, Microsoft on the Issues)

- Meeting the AI moment: Advancing the future through responsible AI (Brad Smith, Microsoft on the Issues)

- AI Risk Management Framework (National Institute of Standards and Technology, NIST)

- Responsible AI Principles and Approach (Microsoft AI)

- A conversation with Microsoft CTO Kevin Scott: What’s next in AI (Microsoft)